# Kosmos-2: Multimodal Large Language Model and OpenVINO

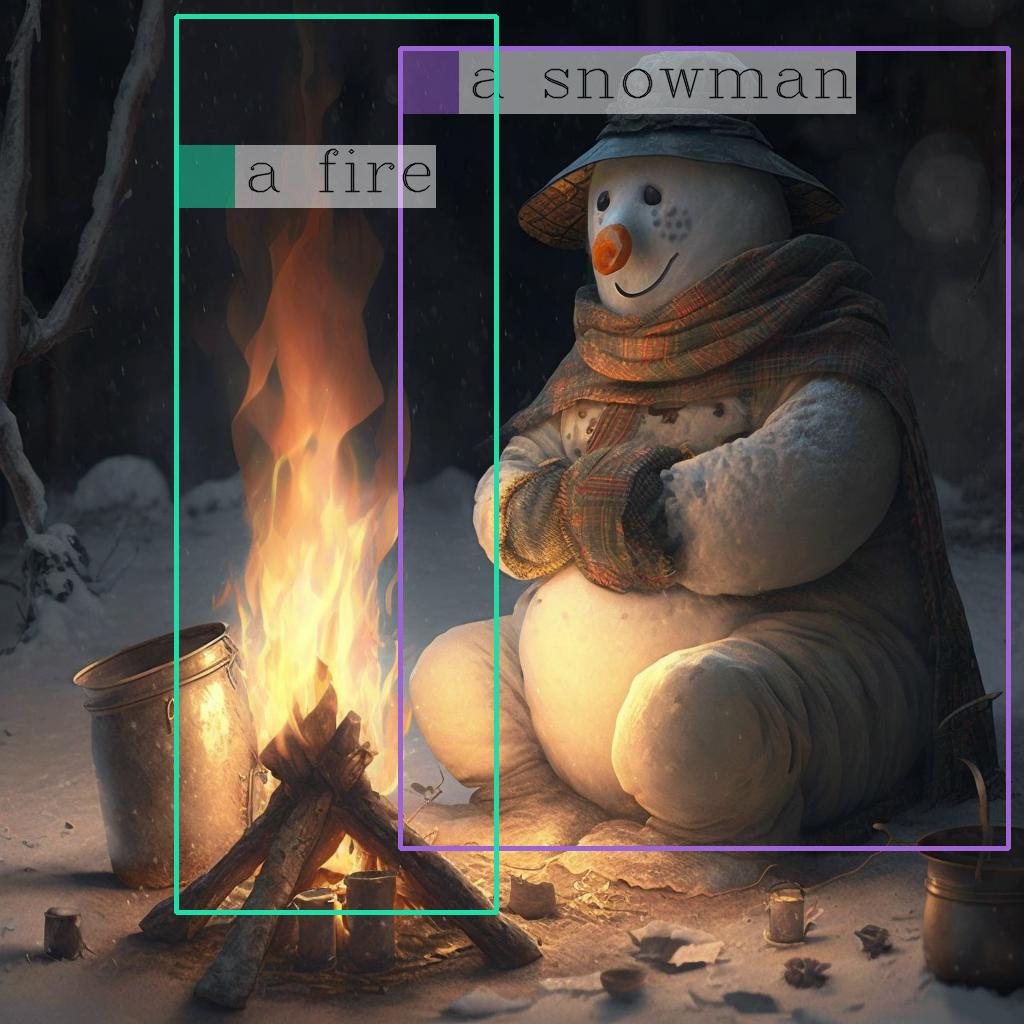

[KOSMOS-2](https://github.com/microsoft/unilm/tree/master/kosmos-2) is a multimodal large language model (MLLM) with new capabilities for multimodal grounding and
referring. KOSMOS-2 can understand multimodal input, follow instructions,
perceive object descriptions (e.g., bounding boxes), and ground language to the visual world.

Multimodal Large Language Models (MLLMs) serve as a general-purpose interface across a wide
range of tasks, including language, vision, and vision-language tasks. MLLMs can perceive general modalities, including
text, images, and audio, and generate free-form text responses in zero-shot and few-shot settings.

[In this work](https://arxiv.org/abs/2306.14824), the authors unlock the grounding capability for multimodal large
language models. Grounding capability
can provide more convenient and efficient human-AI interaction for vision-language tasks. It enables the user to point
directly to an object or region in the image rather than typing a detailed text description to refer to it, and the model
can understand that image region together with its spatial location. Grounding capability also enables the model to respond
with visual answers (i.e., bounding boxes), which can support more vision-language tasks such as referring expression
comprehension. Visual answers are more accurate and resolve coreference ambiguity compared with text-only
responses. In addition, grounding capability can link noun phrases and referring expressions in the generated free-form
text response to image regions, providing more accurate, informative, and comprehensive answers.
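
To make the idea of visual answers concrete, here is a minimal sketch of how grounded text could be turned into bounding boxes. It assumes the representation described in the KOSMOS-2 paper: the image is discretized into a 32 x 32 grid, and each box is written as a pair of location tokens (top-left and bottom-right cell) inside `<object>` tags that follow a `<phrase>` span. The helper names `patch_pair_to_box` and `extract_grounded_phrases` and the sample string are hypothetical and used only for illustration. The sketch maps each token to the full extent of its grid cell, which is a simplification and may differ from the post-processing used in the notebook.

```python
import re

# Assumption: KOSMOS-2 discretizes the image into a 32 x 32 grid of location bins.
GRID_SIZE = 32

# Matches one phrase followed by a single box:
# <phrase>text</phrase><object><patch_index_AAAA><patch_index_BBBB></object>
# Phrases linked to several boxes are not handled in this sketch.
GROUNDED_PHRASE = re.compile(
    r"<phrase>(?P<phrase>.*?)</phrase>"
    r"<object><patch_index_(?P<tl>\d+)><patch_index_(?P<br>\d+)></object>"
)


def patch_pair_to_box(top_left, bottom_right, grid_size=GRID_SIZE):
    """Return (x1, y1, x2, y2) in [0, 1], covering both grid cells entirely."""
    cell = 1.0 / grid_size
    x1 = (top_left % grid_size) * cell            # left edge of the top-left cell
    y1 = (top_left // grid_size) * cell           # top edge of the top-left cell
    x2 = (bottom_right % grid_size + 1) * cell    # right edge of the bottom-right cell
    y2 = (bottom_right // grid_size + 1) * cell   # bottom edge of the bottom-right cell
    return (x1, y1, x2, y2)


def extract_grounded_phrases(text):
    """Return a list of (phrase, normalized box) pairs found in grounded text."""
    return [
        (
            match.group("phrase").strip(),
            patch_pair_to_box(int(match.group("tl")), int(match.group("br"))),
        )
        for match in GROUNDED_PHRASE.finditer(text)
    ]


# Illustrative grounded output; not produced by running the model here.
sample = (
    "An image of<phrase> a snowman</phrase>"
    "<object><patch_index_0044><patch_index_0863></object>"
    " warming himself by<phrase> a fire</phrase>"
    "<object><patch_index_0005><patch_index_0911></object>"
)

for phrase, box in extract_grounded_phrases(sample):
    print(phrase, box)
```

Multiplying the normalized coordinates by the image width and height gives pixel coordinates that can be drawn over the input image.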


## Notebook contents
- Prerequisites
- Infer the original model
- Convert the model to OpenVINO IR
- Inference
- Interactive inference

## Installation instructions
This is a self-contained example that relies solely on its own code.<br/>
We recommend running the notebook in a virtual environment. You only need a Jupyter server to start.
For details, please refer to the [Installation Guide](../../README.md).
