
Hostnames never re-resolved into IP addresses #115

Comments

|

Looks like this would not be straightforward to implement. It would probably need to pass hostnames instead of IP addresses into server_new, and let the resolution happen at connection time instead of inside config_new. The useall option may make this tougher to do. |
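To illustrate passing hostnames rather than resolved addresses into the server constructor, here is a minimal sketch. It assumes a hypothetical `struct server` that keeps the name exactly as configured and decides up front whether it is an IP literal (which never needs a lookup) or a hostname to be resolved at connect time. `struct server`, `server_new_deferred()` and `server_free()` are made-up names for illustration, not carbon-c-relay's actual `server_new`.

```c
#define _GNU_SOURCE
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>
#include <sys/socket.h>

#define PORT_STR_LEN 6   /* "65535" plus terminating NUL */

/* Hypothetical destination record: keeps the name as configured. */
struct server {
    char *host;                 /* hostname or IP literal from the config */
    char port[PORT_STR_LEN];    /* numeric port as a string, e.g. "2003" */
    bool is_literal;            /* true: never needs a DNS lookup */
};

/* True if s is an IPv4 or IPv6 address literal. */
static bool is_ip_literal(const char *s)
{
    unsigned char buf[sizeof(struct in6_addr)];
    return inet_pton(AF_INET, s, buf) == 1 || inet_pton(AF_INET6, s, buf) == 1;
}

/* Hypothetical constructor: stores the name; hostnames are resolved
 * later, at connect time. Returns NULL on a too-long port string or
 * on allocation failure. */
static struct server *server_new_deferred(const char *host, const char *port)
{
    if (strlen(port) >= PORT_STR_LEN)
        return NULL;
    struct server *srv = malloc(sizeof(*srv));
    if (srv == NULL)
        return NULL;
    srv->host = strdup(host);
    if (srv->host == NULL) {
        free(srv);
        return NULL;
    }
    strcpy(srv->port, port);
    srv->is_literal = is_ip_literal(host);
    return srv;
}

static void server_free(struct server *srv)
{
    if (srv != NULL) {
        free(srv->host);
        free(srv);
    }
}
```

Keeping the literal/hostname distinction in the record is what lets IP-only configurations skip resolution entirely, while hostnames can be looked up again on each connect.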

|

Yes, the design is not really suited for this. I need to think about it; I don't want zillions of hostname lookups either. |

|

What would actually help is to SIGHUP the relay process periodically, or whenever a DNS change happens. It will re-read the config, and hence also re-resolve the hostnames in use. |
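Reloading on SIGHUP is commonly implemented by having the signal handler only set a flag that the main loop checks. A minimal sketch of that generic pattern follows. `reload_config()` and `maybe_reload()` are hypothetical stand-ins (the former for re-reading the config, which is where hostnames get re-resolved); this is not carbon-c-relay's actual code.

```c
#define _GNU_SOURCE
#include <signal.h>
#include <stdio.h>
#include <string.h>

/* Set asynchronously by the handler, consumed by the main loop. */
static volatile sig_atomic_t reload_requested = 0;

static void on_sighup(int sig)
{
    (void)sig;
    reload_requested = 1;   /* only async-signal-safe work in a handler */
}

/* Installs the SIGHUP handler; returns 0 on success, -1 on error. */
static int install_sighup_handler(void)
{
    struct sigaction sa;
    memset(&sa, 0, sizeof(sa));
    sa.sa_handler = on_sighup;
    sigemptyset(&sa.sa_mask);
    sa.sa_flags = SA_RESTART;   /* restart interrupted system calls */
    return sigaction(SIGHUP, &sa, NULL);
}

static int reload_count = 0;

/* Hypothetical: re-read the config file and re-resolve its hostnames. */
static void reload_config(void)
{
    reload_count++;
    fprintf(stderr, "SIGHUP received, reloading config\n");
}

/* Called once per iteration of a (hypothetical) main loop. */
static void maybe_reload(void)
{
    if (reload_requested) {
        reload_requested = 0;
        reload_config();
    }
}
```

The heavy work (parsing, DNS lookups) happens in normal program context rather than inside the handler, which is why a periodic external SIGHUP is a cheap way to force re-resolution.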

|

You can emulate this behaviour by SIGHUP-ing the relay, I think that's the best you can get for now. |

|

Collectd went the other way on this issue: collectd/collectd@4a89ba5. This is really inconvenient; I just had to reload my relay for the same issue. |

|

So, if the relay would automagically reload itself every X minutes, you'd be happy? |

|

If it's just a reload, then anybody can send a SIGHUP from cron; it's a workable solution. If anything, honoring the DNS TTLs would be the way to go. It shouldn't be carbon-c-relay's job or concern to do what nscd does. Thanks for all of your great work on carbon-c-relay, by the way. |

|

Ah, so you want to have min(TTLs) as refresh interval or something. I see. |
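Using min(TTLs) as the refresh interval could look roughly like the sketch below. Note that getaddrinfo() does not report TTLs, so the values would have to come from a lower-level resolver interface (for example res_query() from libresolv); here they are simply passed in. The floor and cap (`MIN_REFRESH_SECS`, `MAX_REFRESH_SECS`) and `struct dns_cache_entry` are assumptions added for illustration, the floor guarding against "zillions of hostname lookups" for records with a very low TTL.

```c
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Assumed bounds: a TTL of 0 must not cause a lookup storm, and a huge
 * TTL must not pin stale addresses for days. */
#define MIN_REFRESH_SECS 10u
#define MAX_REFRESH_SECS 3600u

/* Refresh interval = min(TTLs), clamped to [MIN_REFRESH_SECS,
 * MAX_REFRESH_SECS]. With no TTLs at all, the cap is returned. */
static uint32_t refresh_interval(const uint32_t *ttls, size_t n)
{
    uint32_t min = MAX_REFRESH_SECS;
    for (size_t i = 0; i < n; i++)
        if (ttls[i] < min)
            min = ttls[i];
    return min < MIN_REFRESH_SECS ? MIN_REFRESH_SECS : min;
}

/* Hypothetical cache entry for one configured hostname. */
struct dns_cache_entry {
    time_t resolved_at;   /* when the addresses were last looked up */
    uint32_t interval;    /* seconds until the next lookup is due */
};

/* Non-zero once the entry's refresh interval has elapsed. */
static int needs_refresh(const struct dns_cache_entry *e, time_t now)
{
    return now - e->resolved_at >= (time_t)e->interval;
}
```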

|

In an ideal world I think it should resolve on every connect and let the OS (nscd/dnsmasq/systemd-resolved) do the caching. In the real world many systems don't have DNS caching configured.... |

|

Since we/I have always been using IP addresses (to avoid any resolution whatsoever), that is definitely the configuration for people who don't want any resolution to take place. So, thinking this through a bit:

|

Determine when we don't have explicit IP addresses and re-resolve every time we attempt to connect. This also means that we now try all addresses returned in order instead of just the first entry.
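Re-resolving on every connect attempt and trying all returned addresses in order maps onto the standard getaddrinfo() loop. A sketch follows; `connect_any()` is a hypothetical helper, not the relay's function, and it uses a blocking connect() for brevity, whereas a real relay would likely want a connect timeout.

```c
#define _GNU_SOURCE
#include <netdb.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Resolves host:port now (not at config time) and returns a connected
 * TCP socket to the first address that accepts, or -1 if none does. */
static int connect_any(const char *host, const char *port)
{
    struct addrinfo hints, *res, *ai;
    int fd = -1;

    memset(&hints, 0, sizeof(hints));
    hints.ai_family = AF_UNSPEC;      /* IPv4 and IPv6 */
    hints.ai_socktype = SOCK_STREAM;  /* TCP */

    /* A fresh lookup on every call; caching is left to the OS resolver. */
    if (getaddrinfo(host, port, &hints, &res) != 0)
        return -1;

    for (ai = res; ai != NULL; ai = ai->ai_next) {
        fd = socket(ai->ai_family, ai->ai_socktype, ai->ai_protocol);
        if (fd < 0)
            continue;
        if (connect(fd, ai->ai_addr, ai->ai_addrlen) == 0)
            break;                    /* success: keep this socket */
        close(fd);
        fd = -1;                      /* try the next address in order */
    }
    freeaddrinfo(res);
    return fd;
}
```

Because the lookup happens per attempt, a changed DNS answer is picked up on the next reconnect without a config reload; whether that costs a real DNS query each time depends on the system having a local cache (nscd, dnsmasq, systemd-resolved).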

|

There, I guess we'll have to see how that holds up now. :) |

|

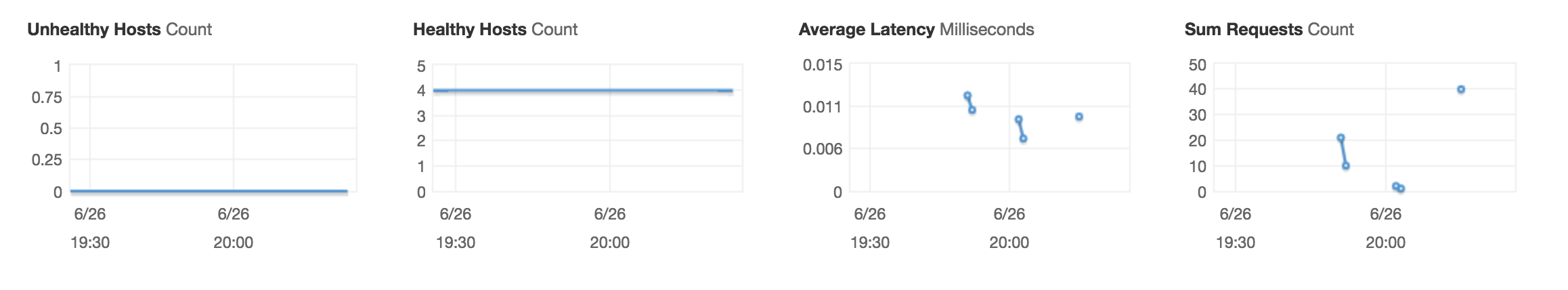

This is still an issue, because carbon-c-relay does not reconnect after the backends behind an ELB go down and come up again. The IPs of the ELB stay the same, so re-resolving does not change anything. For example, I have some telegrafs in an auto-scaling group behind an ELB, and after the nodes are replaced the relays just stop sending data. The ELB is available only as a DNS endpoint. When I restarted all telegrafs, this is what we saw on the relay nodes: On the telegraf node: A relay restart is needed to restore traffic; SIGHUP does not help. It looks like the relay never reconnects, and only a relay restart helps, which is hard to work with at the moment. carbon-c-relay version used: carbon-c-relay v3.1 (ac8cc1). Part of the relay config: The ELB works in TCP mode on both the frontend and the backend, and the idle TCP connection timeout is set to 60 seconds. As you can see, the relay makes a few connections and never closes them by itself. |

I'm no expert on ELB, but if it does what I think it does (drop connections after 60 s without traffic), then this is extremely low. You will at least have to configure all your systems with a lower keepalive_time in |

|

@szibis what is the TTL on the DNS record(s) behind metrics-proxy.monitoring? Also, you specified: I think the missing bit here is that the relay "sees" this as a single target; you used |

|

@grobian for ELB there is no TTL; this is an internal alias in AWS which is updated immediately when it changes. Yes, useall could be a problem: when all nodes behind the ELB are replaced, or AWS simply changes the ELB addresses exposed to the public, this could stop working. But this is not an issue in this case, because the IPs do not change in this short interval. I need useall because I need to spread long-lived connections across all IPs behind the ELB DNS name. For me, a SIGHUP to re-resolve would be a very good option for ELB. From the AWS docs: |

|

For now, all I have done is increase the ELB idle timeout to the maximum of 3600 seconds; this only postpones the problem, which still exists. |

|

Ok, I'm slightly confused then. If the IPs don't change, then there is nothing to re-resolve, is there? Re-reading your first comment, you mention the ELB is restarted or something, and carbon-c-relay is not reconnecting. However, your post also seems to suggest that connections stay in the ESTABLISHED state, i.e. what @piotr1212 said: the OS/relay cannot know the connection is dead, because a proper reset wasn't sent. The relay should wait approximately 10 seconds for a write to succeed; after that it backs out with a log message and closes the connection. Do you see "failed to write()" log messages? |
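Waiting a bounded time for a write to succeed, then giving up, can be sketched with poll() on a non-blocking socket. This is a generic illustration, not the relay's implementation: `write_with_timeout()` and `set_nonblocking()` are hypothetical helpers, the caller is assumed to log and close the connection on failure, and the 10 seconds mentioned above would be passed as 10000 ms. MSG_NOSIGNAL (supported on Linux) is used so that writing to a connection the peer has closed returns EPIPE instead of killing the process with SIGPIPE.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <fcntl.h>
#include <poll.h>
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Puts fd into non-blocking mode. Returns 0 on success, -1 on error. */
static int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

/* Sends all of buf on a non-blocking socket, waiting at most timeout_ms
 * each time the send buffer is full. Returns 0 on success, or -1 with
 * errno set (ETIMEDOUT if the peer stopped draining data). */
static int write_with_timeout(int fd, const char *buf, size_t len, int timeout_ms)
{
    while (len > 0) {
        ssize_t n = send(fd, buf, len, MSG_NOSIGNAL);
        if (n > 0) {
            buf += n;
            len -= (size_t)n;
            continue;
        }
        if (n < 0 && errno == EINTR)
            continue;
        if (n < 0 && errno != EAGAIN && errno != EWOULDBLOCK)
            return -1;                     /* hard error, e.g. EPIPE */

        struct pollfd pfd = { .fd = fd, .events = POLLOUT };
        int r = poll(&pfd, 1, timeout_ms);
        if (r == 0) {
            errno = ETIMEDOUT;             /* buffer stayed full: give up */
            return -1;
        }
        if (r < 0 && errno != EINTR)
            return -1;
    }
    return 0;
}
```

A timeout like this only fires if the send buffer actually fills up; a connection whose packets are silently dropped but which still accepts small writes into the local buffer can look healthy for a long time.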

That is unfortunate. You could still lower the keepalive. It won't keep the connection open, but the OS will not receive responses to the keepalive probes, will notice that the connection is stale, and will close the socket earlier. Not perfect, but better.
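Besides the system-wide keepalive sysctls, Linux allows keepalive to be tuned per socket with setsockopt(). A minimal sketch follows; `enable_keepalive()` is a hypothetical helper, TCP_KEEPIDLE, TCP_KEEPINTVL and TCP_KEEPCNT are Linux-specific option names, and the values in the usage note are illustrative assumptions rather than recommendations from this thread.

```c
#define _GNU_SOURCE
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>

/* Enables TCP keepalive on fd: the first probe is sent after idle_s
 * seconds of silence, then one every intvl_s seconds; after cnt
 * unanswered probes the kernel drops the connection and later socket
 * calls fail (typically with ETIMEDOUT). Returns 0 on success, -1 on error. */
static int enable_keepalive(int fd, int idle_s, int intvl_s, int cnt)
{
    int on = 1;
    if (setsockopt(fd, SOL_SOCKET, SO_KEEPALIVE, &on, sizeof(on)) < 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPIDLE, &idle_s, sizeof(idle_s)) < 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPINTVL, &intvl_s, sizeof(intvl_s)) < 0)
        return -1;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPCNT, &cnt, sizeof(cnt)) < 0)
        return -1;
    return 0;
}
```

For example, `enable_keepalive(fd, 30, 10, 3)` would declare an unresponsive peer dead roughly 30 + 3 × 10 = 60 seconds after the connection goes quiet.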

It's unlikely that there will be no traffic on the connection for longer than 3600 seconds; while not perfect, it might work in practice.

I don't think this works when the connection is in the ESTABLISHED state and the packets are silently dropped. |

|

Nope, no "failed to write()" messages for these hosts. Starting the relay: These IPs come from resolving the ELB; each ELB resolves to 4 IPs. We have 3 ELB DNS names in the relay config, and each ELB has 4 active instances. Health checks on TCP:2003 (the telegraf port) run at 5-second intervals with a 3-second timeout. On the telegraf side the process is running and available, and the ELB health checks against telegraf are passing. After a couple of hours all traffic goes down, and all connections from the relay through this ELB to telegraf are in the CLOSE_WAIT state. And after some time, on the relays: Eventually all connections end up in the CLOSE_WAIT state. And some tcpdump output for the CLOSE_WAIT connections: |
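CLOSE_WAIT means the peer has sent a FIN and the local application has not yet closed its socket. A sender that only ever writes never reads that EOF, so it may not notice. Below is a hedged sketch of how a write-only client could check for this without blocking: poll for readability, then peek; a 0-byte recv() indicates an orderly close by the peer. `peer_closed()` is a hypothetical helper, not something carbon-c-relay is known to do.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <poll.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Returns 1 if the peer has closed its side (EOF or error pending),
 * 0 if the connection still looks open. Never blocks. */
static int peer_closed(int fd)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    if (poll(&pfd, 1, 0) <= 0)
        return 0;                       /* nothing pending (or poll failed): assume open */
    if (pfd.revents & (POLLERR | POLLHUP))
        return 1;
    char c;
    ssize_t n = recv(fd, &c, 1, MSG_PEEK | MSG_DONTWAIT);
    if (n == 0)
        return 1;                       /* orderly shutdown (FIN) received */
    if (n < 0 && errno != EAGAIN && errno != EWOULDBLOCK && errno != EINTR)
        return 1;                       /* e.g. ECONNRESET */
    return 0;                           /* data pending, connection still open */
}
```

A relay that checked this before writing could close and reconnect instead of leaving the socket in CLOSE_WAIT.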

|

After that, on the telegraf host and on another telegraf host: But somehow, after a couple of hours, even a SIGHUP from cron every 3 minutes does not help, but I need to confirm this; I'm leaving it until later today. |

|

After restarting all 9 relays, on the telegraf side: everything works for some time, just like in the screenshot of the ELB CloudWatch console graph. |

|

I may be a bit slow here, but can you describe what your architecture looks like? I see relays -> ELB and relays -> telegraf; you also mention telegraf -> ELB. How does it work, who talks to whom? |

Currently, the problem exists in the first flow. |

|

I'm a bit confused about what your architecture looks like exactly. For flow 1: |

|

Yes, the relay sends traffic to the ELB, and behind this ELB I have a cluster of telegrafs auto-scaled by an ASG using spot instances.

This is the only indicator of the ELB name.

When an instance fails, a new one is started and the ASG adds it to the ELB.

Yes, it happens when any telegraf goes down and is restored by the ASG, or simply when I restart the telegraf daemon. If I do this on all telegraf instances, then all carbon-c-relay connections to these ELB IPs go into CLOSE_WAIT.

Yes, the IPs don't change, and the ELB domain never changes. My question is: why does carbon-c-relay make only one connection per IP? I have had similar problems with Diamond's graphite handler and influxdb handler. Closing connections and then reconnecting solves this: in the graphite handler every N seconds, in the influxdb handler every N sent batches of metrics. But maybe this is a different issue. |
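The workaround described for Diamond's handlers (drop and re-establish the connection every N seconds or every N batches) could be expressed as a small policy check, sketched below. With a TCP-mode load balancer each new connection is presumably balanced on its own, so recycling connections would spread long-lived streams and replace connections whose backend has gone away. `struct recycle_policy`, `struct conn_stats` and `should_reconnect()` are hypothetical; nothing in this thread indicates carbon-c-relay has such an option.

```c
#include <stdbool.h>
#include <time.h>

/* Hypothetical per-connection recycling policy: reconnect after
 * max_batches sent batches or max_age_s seconds, whichever comes first.
 * A limit of 0 disables that criterion. */
struct recycle_policy {
    unsigned max_batches;
    time_t max_age_s;
};

struct conn_stats {
    time_t connected_at;      /* when the current connection was opened */
    unsigned batches_sent;    /* batches written on this connection */
};

static bool should_reconnect(const struct recycle_policy *p,
                             const struct conn_stats *s, time_t now)
{
    if (p->max_batches != 0 && s->batches_sent >= p->max_batches)
        return true;
    if (p->max_age_s != 0 && now - s->connected_at >= p->max_age_s)
        return true;
    return false;
}
```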

|

Ok, so it seems the ELB does weird stuff (IMO). Apart from that, I don't know if it load-balances at all (c-relay only makes 1 connection to each IP), and it seems not to reset connections that are forwarded. I'm wondering whether it would be possible to put the IPs of the telegraf nodes in the carbon-c-relay config (DNS with useall would be fine). This way, a SIGHUP will update the IP list if it changes in DNS, and the relay will use ALL telegrafs, because it will connect to ALL of them instead of making just a single connection to the ELB. It is probably also able to detect write errors in this case, which makes it fail over. |

|

@grobian and there is the second part of the issue: I did test it, and when I have more than 15 or 20 (I don't remember right now, but we can test it again) telegrafs defined as any_of, adding more gives me a buffer overflow in carbon-c-relay (tested on 3.1). And when I use the telegrafs directly, I can use them with static IPs without any DNS, but this scenario needs more testing. |

|

I'd be very interested in the buffer overflow. |

|

I have an issue related to this. I would like to use a simple forward cluster with a couple of carbon-cache nodes, simply for HA. I use Consul with DNS on top of it, so whenever a new carbon-cache server is discovered, Consul adds it to the DNS response. The thing is, if you specify useall, the resolution is done when the config is read, so you won't notice changes in the infrastructure. If you omit useall, you get re-resolution, but each metric is sent to only one of the carbon-caches. I want to re-resolve and also send it to every server. Is there a way of doing this? |
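As described in this thread, useall expands one hostname into one destination per resolved address, and does so when the config is read. That expansion can be sketched with getaddrinfo() and getnameinfo(NI_NUMERICHOST). `expand_useall()` and `MAX_DESTS` are hypothetical names, and the fixed-size output array is a simplification.

```c
#define _GNU_SOURCE
#include <netdb.h>
#include <string.h>
#include <sys/socket.h>

#define MAX_DESTS 64   /* assumed upper bound on addresses per hostname */

/* Resolves host once and writes each distinct numeric address into
 * out[0..n-1]. Returns n, or -1 if the lookup fails. */
static int expand_useall(const char *host, const char *port,
                         char out[][NI_MAXHOST], int max_out)
{
    struct addrinfo hints, *res, *ai;
    int n = 0;

    memset(&hints, 0, sizeof(hints));
    hints.ai_family = AF_UNSPEC;
    hints.ai_socktype = SOCK_STREAM;   /* one result per address, not per socket type */
    if (getaddrinfo(host, port, &hints, &res) != 0)
        return -1;

    for (ai = res; ai != NULL && n < max_out; ai = ai->ai_next) {
        char buf[NI_MAXHOST];
        if (getnameinfo(ai->ai_addr, ai->ai_addrlen, buf, sizeof(buf),
                        NULL, 0, NI_NUMERICHOST) != 0)
            continue;
        int dup = 0;                   /* skip addresses already listed */
        for (int i = 0; i < n; i++)
            if (strcmp(out[i], buf) == 0)
                dup = 1;
        if (!dup)
            strcpy(out[n++], buf);
    }
    freeaddrinfo(res);
    return n;
}
```

Since the list is built once, a server that Consul adds to the DNS answer later does not appear until the expansion runs again, which in this design means a config reload.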

|

This is currently not possible; it requires a complete config reload. |

|

Ok, I expected that. Thanks for the answer either way, and for carbon-c-relay! |

|

Just to clarify: I think the current design of the router cannot cope with "dynamic" clusters like this. There are likely too many assumptions that the cluster composition is static in terms of size. Re-resolving works out fine per destination, but re-creating an entire cluster requires a reload of the entire config. It might be possible to trigger a config reload on something other than SIGHUP, e.g. a config file change (mtime) or a change in a watched DNS record (via TTL or something, I don't know). Such an implementation would be somewhat expensive, since it would lock the relay down on every actual config change (as a reload does currently). |
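Triggering a reload on a config file change (mtime) could be done by polling stat() periodically. Below is a minimal sketch. `struct config_watch`, `watch_init()` and `watch_changed()` are hypothetical names. The sketch compares the nanosecond-resolution `st_mtim` field (POSIX.1-2008), so two edits within the same second are not missed on filesystems that record sub-second timestamps.

```c
#define _GNU_SOURCE
#include <stdbool.h>
#include <sys/stat.h>
#include <time.h>

struct config_watch {
    const char *path;
    struct timespec mtime;   /* last modification time seen */
};

/* Records the current mtime of path. Returns 0 on success, -1 on error. */
static int watch_init(struct config_watch *w, const char *path)
{
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    w->path = path;
    w->mtime = st.st_mtim;
    return 0;
}

/* Returns true (and remembers the new time) if the mtime changed since
 * the last check; false if unchanged or if stat() fails. */
static bool watch_changed(struct config_watch *w)
{
    struct stat st;
    if (stat(w->path, &st) != 0)
        return false;
    if (st.st_mtim.tv_sec == w->mtime.tv_sec &&
        st.st_mtim.tv_nsec == w->mtime.tv_nsec)
        return false;
    w->mtime = st.st_mtim;
    return true;
}
```

The expensive part noted above is unchanged: every detected change would still cost a full reload, so the check itself is cheap but reloads should stay rare.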

I had a cluster of a few relay instances pointing to an Amazon AWS ELB instance. I have a temporary cluster running to tee traffic from our clients, so that it is sent to an older radar cluster as well as to a newer radar cluster with a new hashing scheme and backend nodes.

Over time, AWS changes the DNS CNAMEs for an ELB instance to point to new IP addresses as they do backend restructuring. It seems, though, that carbon-c-relay resolves the IP addresses once and keeps them until restart. It would be nice if it periodically refreshed the IP list behind the CNAME it is aiming at: either on every connection, cached for the DNS TTL, or perhaps only after a specific number of connection errors.
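The last option, re-resolving only after a number of connection errors, amounts to a consecutive-failure counter per destination. A sketch under assumptions: `RERESOLVE_AFTER_FAILURES`, `struct dest_health` and `record_attempt()` are hypothetical, and the threshold of 3 is arbitrary.

```c
#include <stdbool.h>

/* Hypothetical threshold: re-resolve after this many consecutive failed
 * connection attempts to the same configured hostname. */
#define RERESOLVE_AFTER_FAILURES 3

struct dest_health {
    unsigned consecutive_failures;
};

/* Records the outcome of one connection attempt. Returns true when the
 * caller should re-resolve the hostname before the next attempt. */
static bool record_attempt(struct dest_health *h, bool connected)
{
    if (connected) {
        h->consecutive_failures = 0;
        return false;
    }
    if (++h->consecutive_failures >= RERESOLVE_AFTER_FAILURES) {
        h->consecutive_failures = 0;   /* count afresh after the new lookup */
        return true;
    }
    return false;
}
```

This keeps DNS traffic near zero while everything works, at the cost of only reacting once connections are already failing; it would not help in the CLOSE_WAIT case above, where connects succeed.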

My config file is this:

```
cluster retired
    any_of useall oldelb.internal.amazonaws.com:2001;

cluster new
    any_of useall newelb.internal.amazonaws.com:2001;

match * send to retired;
match * send to new stop;
```
