
# Zero-shot Image Classification with SigLIP


[Open in Colab](https://colab.research.google.com/github/openvinotoolkit/openvino_notebooks/blob/main/notebooks/282-siglip-zero-shot-image-classification/282-siglip-zero-shot-image-classification.ipynb)


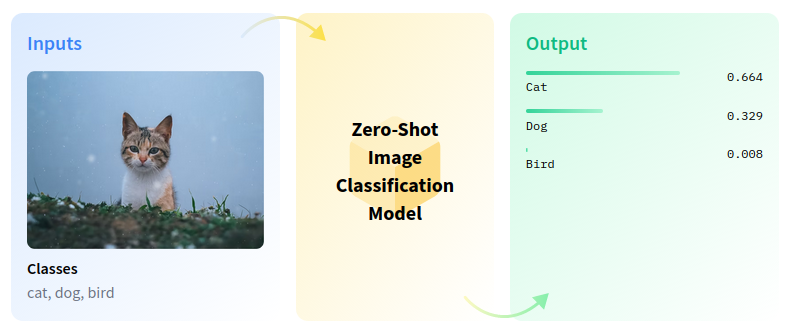

Zero-shot image classification is a computer vision task that aims to classify images into one of several classes without the model having been trained on labeled examples of those classes.


In this tutorial, you will use the [SigLIP](https://huggingface.co/docs/transformers/main/en/model_doc/siglip) model to perform zero-shot image classification.
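Conceptually, zero-shot classification with a model like SigLIP compares one image embedding against the text embeddings of several class prompts (for example "a photo of a cat") and picks the best match. The sketch below illustrates that scoring step only. It assumes the embeddings have already been produced by the image and text encoders; the toy vectors, the function name `zero_shot_classify`, and the `logit_scale`/`logit_bias` defaults are hypothetical placeholders, not the trained model's values.

```python
import numpy as np


def zero_shot_classify(image_emb, text_embs, labels, logit_scale=10.0, logit_bias=-10.0):
    """Hypothetical helper: score one image embedding against one text embedding per label."""
    # L2-normalize so the dot product is a cosine similarity.
    img = image_emb / np.linalg.norm(image_emb)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    # SigLIP-style logits: scaled cosine similarity plus a bias (placeholder values here).
    logits = logit_scale * (txt @ img) + logit_bias
    # Each label gets an independent sigmoid score, so the scores need not sum to 1.
    probs = 1.0 / (1.0 + np.exp(-logits))
    return labels[int(np.argmax(probs))], probs


# Toy example: three "text embeddings" and an image embedding closest to the first one.
label, probs = zero_shot_classify(np.array([0.9, 0.1, 0.0]), np.eye(3), ["cat", "dog", "car"])
print(label, probs)
```

Because the scores come from a per-label sigmoid rather than a softmax, each one can be read on its own as "does this prompt match the image", which is why they do not have to add up to one.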


## Notebook Contents


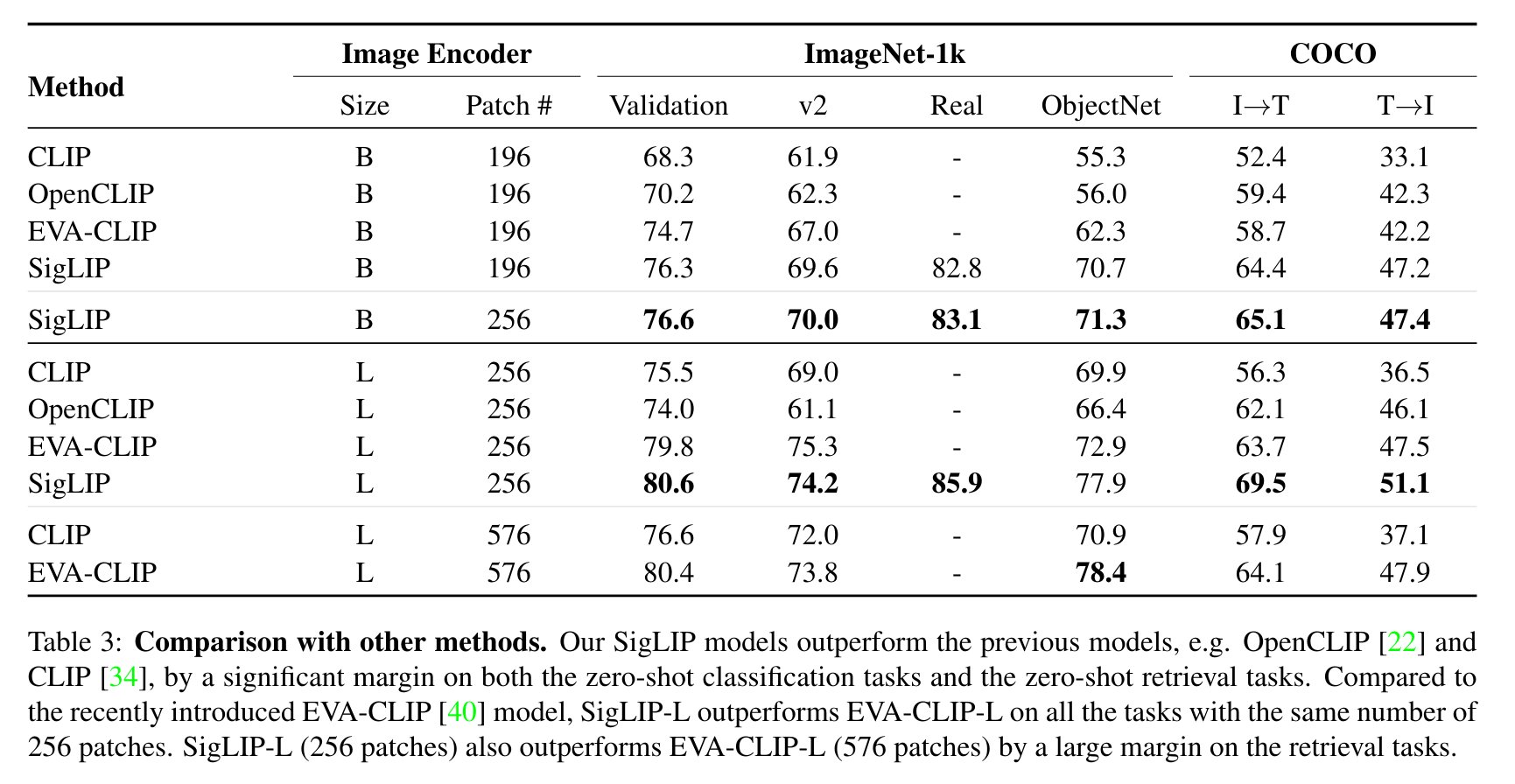

This tutorial demonstrates how to perform zero-shot image classification using the open-source SigLIP model. SigLIP was proposed in the [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343) paper. It replaces the softmax-based contrastive loss used in [CLIP](https://github.com/openai/CLIP) (Contrastive Language–Image Pre-training) with a simple pairwise sigmoid loss, which treats every image-text pair in a batch as an independent binary classification. The authors report that this improves zero-shot classification accuracy on ImageNet.


[\*_image source_](https://arxiv.org/abs/2303.15343)
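The pairwise sigmoid loss from the paper can be written as $L = -\frac{1}{|B|}\sum_{i}\sum_{j}\log\sigma\big(z_{ij}(t\,x_i\cdot y_j + b)\big)$, where $x_i$ and $y_j$ are normalized image and text embeddings, $z_{ij}=1$ for matching pairs and $-1$ otherwise, $t=e^{t'}$ is a learned temperature, and $b$ is a learned bias. The NumPy sketch below implements that formula for illustration; it is not the training code from the paper or from this notebook. The defaults $t'=\log 10$ and $b=-10$ are the initial values reported in the paper, and the function name `siglip_loss` is hypothetical.

```python
import numpy as np


def siglip_loss(img_emb: np.ndarray, txt_emb: np.ndarray,
                t_prime: float = np.log(10.0), b: float = -10.0) -> float:
    """Illustrative pairwise sigmoid loss over a batch of n image-text pairs.

    Row i of img_emb and row i of txt_emb are assumed to be the matching pair;
    every other (i, j) combination is treated as a negative pair.
    """
    # L2-normalize both sets of embeddings.
    x = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    y = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    # logits[i, j] = t * <x_i, y_j> + b, with t = exp(t_prime).
    logits = np.exp(t_prime) * (x @ y.T) + b
    n = logits.shape[0]
    # z[i, j] = +1 on the diagonal (matching pairs), -1 everywhere else.
    z = 2.0 * np.eye(n) - 1.0
    # -log(sigmoid(s)) == log(1 + exp(-s)), computed stably with logaddexp.
    return float(np.logaddexp(0.0, -z * logits).sum() / n)


eye = np.eye(4)
print(siglip_loss(eye, eye))        # perfectly aligned pairs: low loss
print(siglip_loss(eye, eye[::-1]))  # shuffled pairs: much higher loss
```

Unlike CLIP's softmax loss, no term here normalizes across the batch: each entry of the `n x n` logit matrix contributes its own binary log-loss, which is the property the paper relies on.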


You can find more information about this model in the [research paper](https://arxiv.org/abs/2303.15343), the [GitHub repository](https://github.com/google-research/big_vision), and the [Hugging Face model page](https://huggingface.co/docs/transformers/main/en/model_doc/siglip).


The notebook contains the following steps:


1. Instantiate the model.
1. Run PyTorch model inference.
1. Convert the model to OpenVINO Intermediate Representation (IR) format.
1. Run the OpenVINO model.
1. Apply post-training quantization using [NNCF](https://github.com/openvinotoolkit/nncf):
    1. Prepare the dataset.
    1. Quantize the model.
    1. Run the quantized OpenVINO model.
    1. Compare file sizes.
    1. Compare inference time of the FP16 IR and quantized models.
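The last two quantization steps compare the FP16 and quantized models by size and speed. A minimal sketch of helpers for those comparisons follows. The names `ir_size_mb` and `median_latency_s` are hypothetical (they are not part of OpenVINO or NNCF), and the sketch assumes each IR is stored as an `.xml` topology file with a `.bin` weights file of the same name next to it.

```python
import statistics
import time
from pathlib import Path


def ir_size_mb(xml_path) -> float:
    """Hypothetical helper: on-disk size of an IR (.xml topology + .bin weights) in MiB."""
    xml = Path(xml_path)
    weights = xml.with_suffix(".bin")  # assumes the .bin file sits beside the .xml file
    return (xml.stat().st_size + weights.stat().st_size) / 1024**2


def median_latency_s(infer, inputs, warmup: int = 2, runs: int = 10) -> float:
    """Hypothetical helper: median wall-clock time in seconds of infer(inputs)."""
    # Warm-up calls are excluded so one-time setup costs do not skew the result.
    for _ in range(warmup):
        infer(inputs)
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(inputs)
        times.append(time.perf_counter() - start)
    return statistics.median(times)
```

For instance, with an `openvino.CompiledModel` (which is callable on a dict of inputs), one could call `median_latency_s(compiled_model, inputs)` once for the FP16 model and once for the quantized model, and `ir_size_mb(...)` on each saved `.xml` path; the paths would be wherever the notebook saved its IRs. Using the median rather than the mean keeps a few slow outlier runs from distorting the comparison.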


The image below shows the zero-shot image classification results that the SigLIP model produces in this notebook.


## Installation Instructions


If you have not installed all required dependencies, follow the [Installation Guide](../../README.md).
